A few months ago, I read a great blog post by Iain McCowatt (@imccowatt) about “Models of Automation”.

The post described a problem that Iain had witnessed between two of the teams he was working with on automated testing: there was a lack of communication between them, and this was down to the different models the teams were using to write their automation scripts…

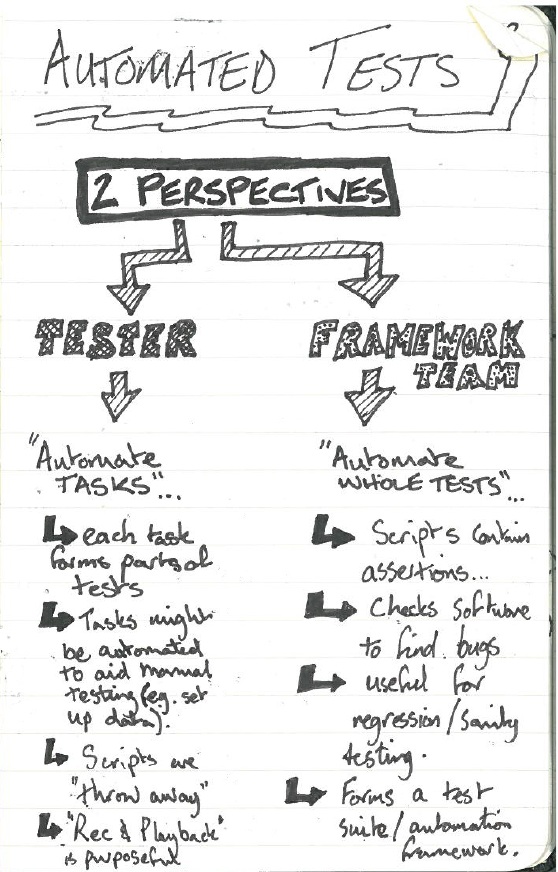

The framework team’s automated scripts were aimed at automating “whole tests”, whereas the test team’s automation was aimed at “tasks”.

I can relate to both models that the teams were using as when I first started learning and attempting to build automated scripts, I had the mentality of the test team in Iain’s examples, while I was learning from people who were coming from the other angle…

I managed to have many conversations and positive debates around the purpose of the scripts too, which led me down the path of investigating the benefits of “throwaway” automation scripts!

I must admit, I am a bit of an advocate for having throwaway scripts, as I see them as a very useful tool in a manual tester’s arsenal.

Basically, throwaway automation scripts are automation scripts that don’t primarily focus on finding bugs… They might not contain any assertions at all. Or they might, but the point is that assertions won’t be the main purpose of the script.

The main purpose of a throwaway automation script is actually “test set-up”: it gets you to the point where you are ready to test a lot more quickly, be it by injecting some test data into a system or by performing a set of prerequisite actions that have to happen before you can start testing.
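To make the idea concrete, here is a minimal sketch of a throwaway set-up script in Python. Everything in it is an assumption for illustration: the `users` table, its columns, the sample accounts and the `seed_test_users` helper are hypothetical, and an in-memory SQLite database stands in for whatever system you are actually testing. Note that the script contains no assertions; its only job is to put the data in place.

```python
import sqlite3

# Hypothetical test accounts; the names and schema are illustrative only.
TEST_USERS = [
    ("alice", "alice@example.com", "admin"),
    ("bob", "bob@example.com", "editor"),
    ("carol", "carol@example.com", "viewer"),
]


def seed_test_users(conn):
    """Create the (assumed) users table if needed and insert the test accounts."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users ("
        "username TEXT PRIMARY KEY, email TEXT NOT NULL, role TEXT NOT NULL)"
    )
    # INSERT OR REPLACE lets the script be re-run without failing on duplicates.
    conn.executemany(
        "INSERT OR REPLACE INTO users (username, email, role) VALUES (?, ?, ?)",
        TEST_USERS,
    )
    conn.commit()
    return len(TEST_USERS)


if __name__ == "__main__":
    # An in-memory database stands in for the real system under test.
    connection = sqlite3.connect(":memory:")
    count = seed_test_users(connection)
    print(f"Seeded {count} users; ready to start testing.")
    connection.close()
```

Once the script has run, the checking and exploring is still done by you. If the script stops being useful next week, you delete it and lose very little.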

I also think that this is where the usefulness of various “record and playback” tools might come in… As we all know, record and playback tools are hopeless for building an automated framework, where tests(/checks) are the main purpose of the scripts. But “record and playback” scripts are perfectly adequate for throwaway script scenarios, where you want to create a script quickly simply to mimic performing the actions.

What do you think? What perspective do you have when writing automated scripts?

And have you ever used throwaway automated scripts?

To help conversations go more smoothly and clearly, I find it useful to break things down a little more finely and more clearly.

Testing—the process of evaluating a product by learning about it through experimentation, which includes to some degree: questioning, study, modeling, observation and inference.

Tool-assisted testing—any use of computing tools, hardware and software, to support testing.

Automated checks—using computing tools, hardware and software, to make evaluations by applying algorithmic decision rules to specific observations of the product.

Test automation—Tools and technologies used in tool-assisted testing or in automated checks.

So,

– “Automated testing” doesn’t exist, as such, since machinery can assist a human who is questioning, studying, modelling, observing, inferring and so forth, but machinery cannot perform any of those actions.

– Automated checking, by contrast, does exist, since machinery can perform algorithmic actions.

– Using “throwaway automation” is an example of using tool assistance in testing.

– The stuff that the framework team did was automated checking.

—Michael B.


Hey Michael, thanks for the comment!

I agree with your definitions and agree with the fact that automation is checking rather than testing – I do try to be an advocate of highlighting this whenever possible!

I think when it comes to the terminology of “automated testing”, I tend to use it in the same meaning as you defined “test automation” where I’m talking about the technology… Is that wrong? And if it is, then wouldn’t the term “test automation” also be inconsistent then?

Part of the confusion is that the terminology of “automated testing” has been used in reference to the technology since automation has been available. If only people had used the term “automated checking” back then instead we might not have such a terminology mess to contend with! 🙂


Hi Danash,

Nice post,

I think many use “Automation” while missing the distinction between the 2 main roles underneath it: Infrastructure developers, and Script writing/execution/investigation (one by developers, the other by testers).

As to tool-assisted testing, a practice I have found important is adding a semi-manual GUI to the automation framework. This makes it easy to use the same infrastructure for both full-blown automation and ad-hoc assistance to manual work, while relieving the manual tester of the need to restart these heavy frameworks again and again for each step, as they reside in the background and dedicated tasks are invoked from the GUI when needed.

@halperinko – Kobi Halperin


Very good post about Automation testing.
